Using AI to Power-Charge Your Memory Palace

Turn words into unforgettable images with AI

Published on

Part of the series: Illustrating Memory Palaces with AI

Context: What This Adds Beyond Anki

In previous articles on Memory Overflow I explained why a memory palace works, how I build one, and how I populate it with foreign-language vocabulary. The key difference from Anki—whose benefits I've extolled and whose drawbacks I've bemoaned—is agency. A palace demands my active choice at every stage: the familiar place I walk, the cross-linguistic puns I forge, the improbable scenes I stage. Anki schedules; I compose, place, and rehearse.

I am far from being hostile to technology. Arts of memory have been refined for millenia, but nothing prevents us from testing modern aides. The biggest gains, in my experience, occur with visualization—both of the frame (loci) and of the scenes (the emblematic micro-dramas encoding words).

Loci, Stabilized: A Simple Gallery

A mere phone is enough to photograph the stations (loci) along my route—say, the Jardin des Plantes where I place my scenes. This yields a gallery I can revisit to stabilize fragile spatial memory. With photos, I keep a clean index of scenes and places and refresh the exact look of each stop. Memory often preserves only a faint, shifting trace; re-seeing the successive parts of the set makes review faster and surer. Later, when I describe more advanced workflows, this album will prove even more useful.

Scenes, Upgraded: Why I Now Use AI

Successful palace work hinges on dual visualization: the backdrop and the emblematic scenes that encode meaning and sound. Until very recently, getting faithful illustrations for such symbolic, composition-heavy scenes meant either drawing well myself or hiring an artist. Now the obvious solution is AI.

I have tested leading generators regularly. Only from the gpt-image-1 era onward (March–April 2025) did I consistently obtain faithful renderings of complex prompts where each element may defy common sense. I painted around fifty scenes with Ideogram 3.0, released roughly at the same time, slightly behind on average quality but serviceable. I’m writing in August 2025; any model with a similar Elo score on Artificial Analysis would likely perform as well.

Why I Needed This: Limits of Pure Mental Imagery

If a real scene I once saw fades quickly, a constructed mental image is even harder to hold with precision. People’s ability to visualize at will surely varies and can probably improve. My own experience is blunt: I cannot, eyes closed, sustain a scene as precise and vivid as a remembered painting. For almost a thousand Sanskrit words I tried—what resulted were ethereal clichés, thin as vitreous floaters that dissolve at a glance. Hence my search for an external visualization support for each emblem.

Before Palaces: What Images Already Did for My Anki Decks

Long before I embraced palaces, I discovered that illustrating every card helped a lot in Anki: higher recall, less arid review, and better separation of synonyms. This was pre-current-AI. I trawled museum catalogues, image repositories, and even sociolinguistic corpora looking for workable matches: “force” got Hercules; “treachery,” Iago. It was laborious and brittle, of course. Often I drew a blank because my cultural memory failed me, or because no artist had painted exactly what I needed.

A Simple Pipeline for Cards (That Evolved into Scenes)

When the first promising models appeared, I adopted a low-friction pipeline for Anki: use AI to draft detailed prompts for images illustrating each term, then feed those prompts to a generator (Ideogram or similar). Early fidelity was awful, but good enough for my single-word cards. Here is one relic from my teratology shelf, made with Stable Diffusion on 21 March 2023:

It is astonishing that, in two years, we went from this tangle of barely legible strokes to photorealistic images and videos. Less noted, but crucial for palace work, is a second axis of progress: strict adherence to user intent and spatial composition. That is what now lets me depict multi-emblem scenes reliably.

From Single Emblems to Paradoxical Tableaux

It is one thing to illustrate the single word on a flashcard; it is another to assemble half a dozen odd emblems—each phonetic, each semantic, all interacting—without omissions or distortions. Modern models finally make this feasible.

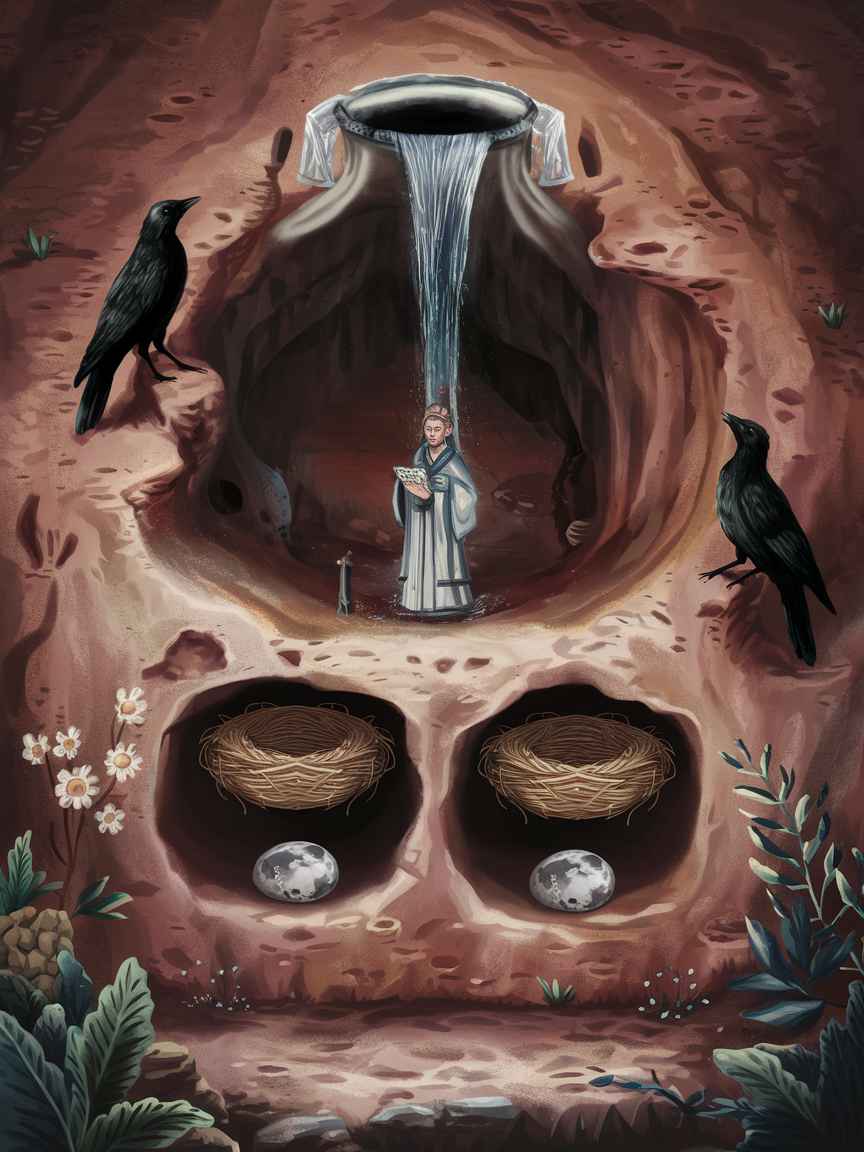

Take the five Sanskrit words I used in the previous article. The associated emblems—two of which I decoded earlier—are:

- Two little identical nests under a hollow udder.

- A black crow imitates another one above.

- In a deep hollow cave a chanter recites a hymn.

- From a water jar a waterfall pours down into that deep cave.

- In a little niche in a rock wall, a small scale statue of the moon.

Feeding this to Ideogram 3.0 (available since spring 2025) yields:

All the emblems are there—the two crows and the chanter deep in the cave—cleanly drawn and legible as a whole. Even interactions that defy common sense (the cave ending in a jar neck) are rendered clearly enough to memorize. Ideogram doesn’t read my mind, and this isn’t the exact scene I first imagined, but it is persuasive and perfectly usable as a visualization support.

What Changed Between 2023 and 2025

- Isolated images: By 2023–early 2025, top models were already excellent at single images.

- Compositions: Since the gpt-image-1 era, they also handle contrived compositions with multiple, spatially constrained elements—precisely what palace scenes require.

- Practical effect: The former shadowiness of purely mental emblems ceases to be a weakness. I now externalize scenes once, then rehearse from memory, with the images serve as training wheels.

Workflow I Actually Use Today

- Index the route. Photograph the loci; name each station; keep an index.

- Draft emblems. For each word, fuse sound and sense; write a prompt. If it enforces placement and relations, all the better, otherwise AI will most likely come up with a convincing output.

- Generate & select. Gpt-image-1 will probably one-shot the task, if it doesn't keep going until you end up with a composition-faithful rendering. Consider clarifying your prompt.

- Attach to locus. File the image under its station; one glance locks the scene.

- Wean off. After a few reviews, I close the gallery and walk the palace unaided.

Where This Leaves Anki

I still value Anki for certain pipelines, but its unit is the atomized card. Palaces let me recite an ordered inventory and strengthen links on the fly. AI-assisted imagery closes the last gap: I no longer struggle to visualize complex tableaux with sufficient sharpness. The result is an inner map I can navigate, instead of a system that monitors me.

What Comes Next

In the following articles of the series, I will show how I vary styles, palettes, and genres, and how I overlay scenes onto photographs of the loci to deepen spatial anchoring and accelerate learning.