Visualizing Vocabulary with AI for Perfect Recall: Reconciling Anki with Memory Palaces

A workflow to turn loci into Anki-ready flashcards, bolstered by palace structure

Published on

Part of the series: Blending Flashcards & Memory Palaces

Why cross Anki with loci now

In my previous piece on the hybrid I’m after, I argued that Anki’s timing and a memory palace’s structure are complementary. I want the scheduler’s discpline and regular quality checks, plus the spatial cohesion I get from loci. The method below is my current best attempt at that union.

Flashcards, as such, lack structural cohesion. Each review is an isolated diode blinking in the dark—no map, no neighborhoods, like a neurone in a synapse-less brain. I want every card to light up a region of the lexicon anchored in a place I can revisit at will. Instead of offloading the entire lexicon to a machine, I keep the whole inside me and let Anki run spot checks.

The constraint: no keyword method

For this pass I deliberately drop the keyword method (cross-lingual rebuses). It reveals too much on the front of an Anki card and, because such rebuses are surreal by design, they fracture during segmentation. I keep only the signified (meaning) in the illustration and ignore the signifier (sounds).

Starting point: an alphabetical lexicon

While reading Modern Greek I collected several hundred items, then sorted them alphabetically. The first five illustrate the workflow:

- άβγαλτος/wTsl: inexperienced (e.g., green, naive)

- αγαλλίαση/wTsl: exultation, jubilation, rapture

- αγιάζι/wTsl: hoarfrost, white frost

- αδράχνω/wTsl: to seize, grab

- ακκισμός/wTsl: affected manners, coyness

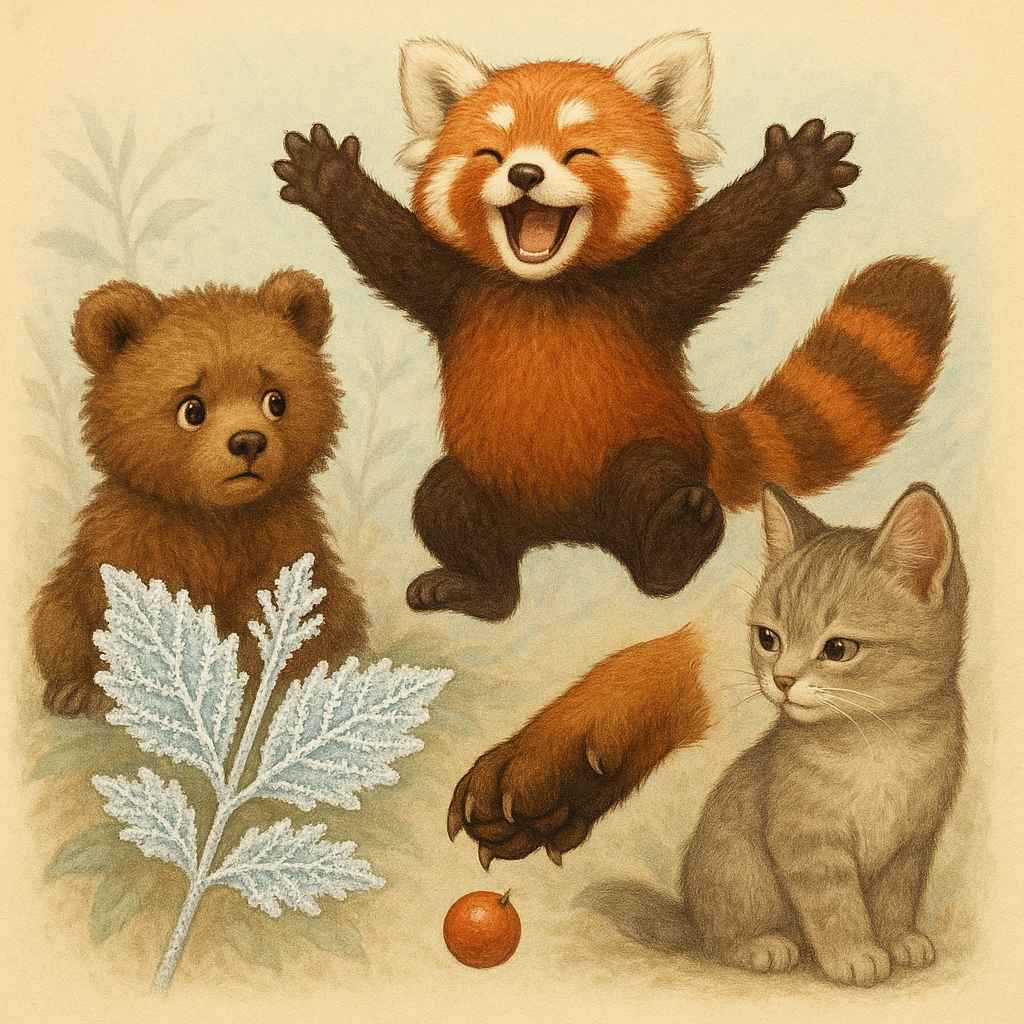

For variety and segmentation readiness, I first asked a model to propose one concrete emblem per word (no overlap, no abstraction), with a light sprinkling of “cute animals” to make repeated reviews more pleasant—and this post more palatable! The output reads:

- άβγαλτος/wTsl: inexperienced (e.g., green, naive)/prompt: A very young, fluffy bear cub, looking puzzled and inexperienced.

- αγαλλίαση/wTsl: exultation, jubilation, rapture/prompt:A red panda in pure exultation, mid-air, arms outstretched.

- αγιάζι/wTsl: hoarfrost, white frost/prompt:Close-up of delicate white frost crystals coating a single leaf.

- αδράχνω/wTsl: to seize, grab/prompt:A fox’s paw caught mid-air, about to seize a falling berry.

- ακκισμός/wTsl: affected manners, coyness/prompt:A kitten with affected manners, turning away with a coy expression.

Compose one scene per five words

I then ask an image model to compose a single scene where all five emblems are present, distinct, and non-overlapping—explicitly optimizing for downstream mask segmentation.

Why a five-word tableau? It’s dense enough to be efficient, yet simple enough that each emblem remains locatable and maskable.

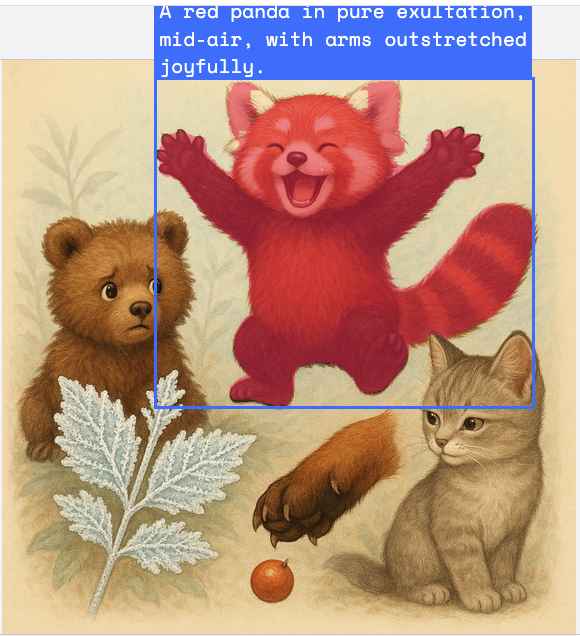

Segment with natural language masks (Gemini 2.5)

Recent vision-language models will cut segmentation masks from a plain-English description (“isolate the mid-air red panda with outstretched arms”). I reuse the exact five micro-prompts above as mask queries to retrieve five masks—one per emblem—via Google’s Spatial Understanding demo or the API. The output is crisp:

This conversational segmentation—as Google’s team calls it—removes the need for training a custom model and keeps the pipeline scriptable end-to-end. I'm grateful to Simon Willison for his July article on that topic.

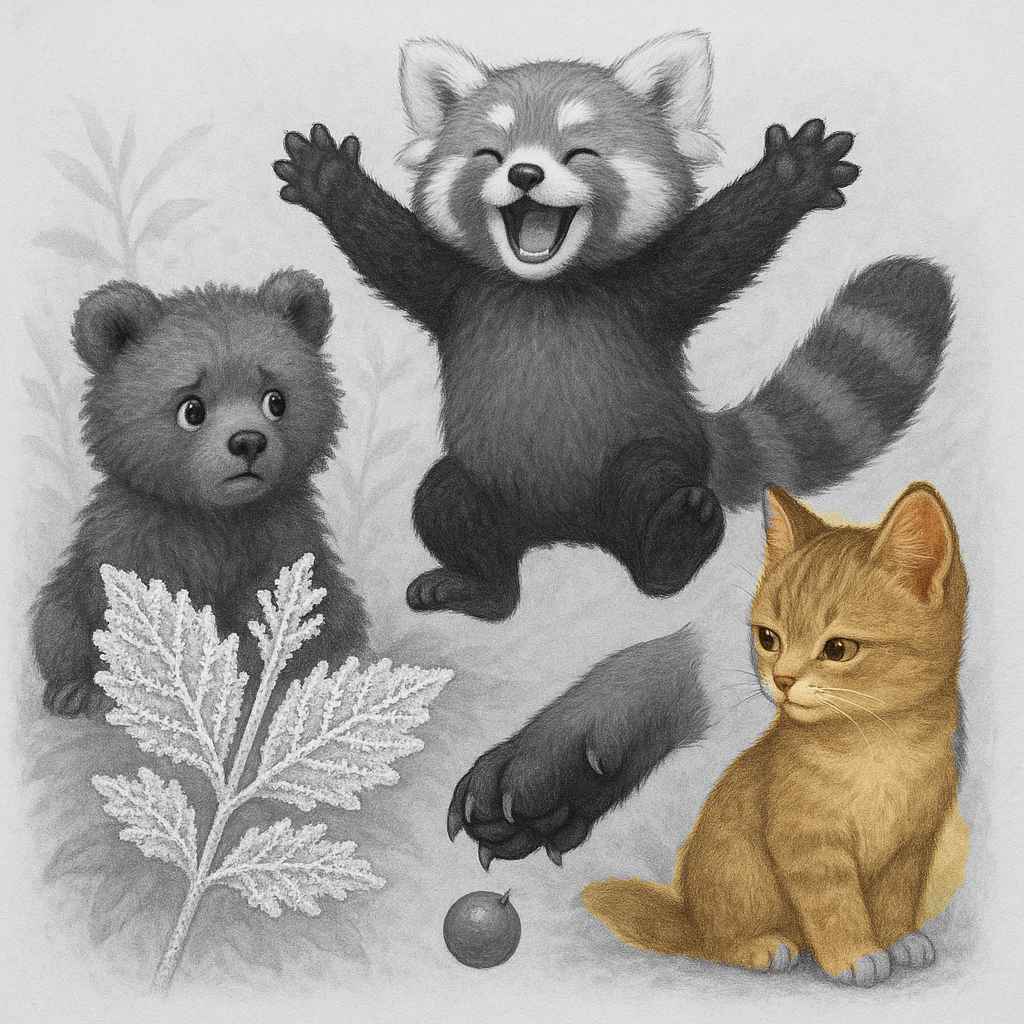

From masks to cards: one highlighted emblem per image

With the masks in hand, a small script applies each mask to the original scene, spotlighting one emblem and softly desaturating the rest. That yields five per-word illustrations from a single source image. Example for ακκισμός (“affectation”):

Why not reuse the same full scene for all five words? Because identical fronts create cripling interference. In my experience across ~10,000 cards, repeated imagery blurs distinctions: when one illustration stands for many words, they all spring to mind at once, making it hard to retrieve the right answer. Masking preserves distinctiveness, and the common scene still provides contextual, spatial grounding.

Importing into Anki: minimal, robust fields

I import each item with:

- Front: image (highlighted emblem) + audio (TTS for pronunciation)

- Back: lemma + concise gloss from an authoritative dictionary.

I keep active-recall direction (L1 → L2) for production strength and let Anki pace reviews. The palace delivers structure; Anki probes stability. For a deeper dive on my Anki design and rationale, see my earlier notes on SRS.

Where the palace comes in

Once I’ve minted twenty-five cards (five scenes × five words), I place their parent scenes as a “lasagna” on a single station in the palace: five narrow strips fused into one overlay on the locus photo. Before turning to Anki, I rehearse a lot: scan the strip collage, name each emblem, and walk the station in sequence. The overlay/strip method is my answer to the scarcity of loci in my favorite palace, the botanical garden; it compresses five scenes into one place without muddling recall.

The review loop that actually holds

- Daily: walk the newest palace regions to consolidate the spatial map.

- Scheduled: let Anki interrogate a fragment of the whole; each image teleports me to the exact locus cluster.

- Weekly: a fast palace sweep recites the relevant stretch in order, shoring up links Anki doesn’t test.

I’ve laid out the case for palaces as a cure to decontextualized recall elsewhere; this adds a practical mesh between the two systems.

Great gains

This workflow does three things at once: keeps scenes cohesive, yields per-word card fronts without interference, and anchors Anki reviews in a spatial map I actually own. The palace carries the sequence; Anki times the checks. Together, they finally cooperate.